Thoughts on Neuroscience, AI and Transformers

I've always found the term "neural networks" in AI a bit misleading. As I explain in other posts, the essence of a deep learning model is a multilayered composite function that is fit to training data. The analogy with neuroscience really isn't needed and usually feels forced to me. That said, I came across a very interesting 2023 paper from IBM and MIT, Building Transformers from Neurons and Astrocytes (Kozachkov et al., 2023), which proposes a possible relationship between the mathematics of AI Transformers and a specific class of brain cells called astrocytes. The purpose of this post is to summarize my notes and thoughts on that paper.

- Besides neurons, the brain contains a large population of non-neuronal cells called glial cells. Astrocytes are a subset of glial cells that are involved in memory and learning, and they interact with neurons in a way the authors suggest resembles AI Transformers. In their words, they "hypothesize that neuron–astrocyte networks can naturally implement the core computation performed by the Transformer block in AI."

- To test their hypothesis, the authors implement an artificial neuron-astrocyte circuit that approximates AI Transformers and compute the relative error between the outputs of the two computations. This approach seems reasonable, but it is a bit suspect to me, since what is really being tested is a deliberately constructed astrocyte-like/Transformer-like circuit rather than the real neurological system itself. That said, science is an incremental process, so I still appreciate this work: if one can show that it is possible to construct a biologically inspired circuit that approximates AI Transformers and produces consistent results, that is still an interesting result!
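The quoted "core computation" is self-attention, and the test described above boils down to comparing an exact computation against an approximation built from m hidden units. As a hedged sketch of that kind of comparison, the code below computes exact softmax self-attention and then approximates it with m positive random features (a Performer-style trick), reporting the relative error as m grows. This is a swapped-in technique for illustration only, not the neuron-astrocyte circuit from the paper; the function names, input sizes, and the choice of a Frobenius-norm relative error are my own assumptions.

```python
import numpy as np

# Illustrative stand-in, NOT the paper's neuron-astrocyte circuit: exact softmax
# attention vs. a random-feature approximation with m "hidden units".

def softmax_attention(Q, K, V):
    """Exact scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    scores = Q @ K.T / np.sqrt(Q.shape[1])
    scores -= scores.max(axis=1, keepdims=True)      # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)    # each row sums to 1
    return weights @ V

def positive_random_features(X, W):
    """phi(x) = exp(W x - |x|^2 / 2) / sqrt(m), so E[phi(q) . phi(k)] = exp(q . k)."""
    m = W.shape[0]
    half_sq_norms = 0.5 * np.sum(X**2, axis=1, keepdims=True)
    return np.exp(X @ W.T - half_sq_norms) / np.sqrt(m)

def approx_attention(Q, K, V, m, rng):
    """Approximate softmax attention using m random features."""
    d = Q.shape[1]
    scale = d ** -0.25                   # split the 1/sqrt(d) factor between queries and keys
    W = rng.normal(size=(m, d))          # random projections w_i ~ N(0, I)
    phi_q = positive_random_features(Q * scale, W)   # (n, m)
    phi_k = positive_random_features(K * scale, W)   # (n, m)
    numer = phi_q @ (phi_k.T @ V)        # (n, d_v) unnormalized outputs
    denom = phi_q @ phi_k.sum(axis=0)    # (n,) approximate softmax normalizers
    return numer / denom[:, None]

def relative_error(approx, exact):
    """Frobenius-norm relative error ||approx - exact|| / ||exact||."""
    return np.linalg.norm(approx - exact) / np.linalg.norm(exact)

rng = np.random.default_rng(0)
n_tokens, d = 6, 4
Q, K, V = (0.5 * rng.normal(size=(n_tokens, d)) for _ in range(3))
exact = softmax_attention(Q, K, V)

errors = {}
for m in (10, 100, 1_000, 10_000):
    errors[m] = relative_error(approx_attention(Q, K, V, m, rng), exact)
    print(f"m = {m:>6}: relative error = {errors[m]:.4f}")
```

With the fixed seed, the printed errors should shrink as m grows (roughly like 1/sqrt(m)), because each additional feature is one more Monte Carlo sample of the softmax kernel. That is the same qualitative trend the authors report for their circuit, even though the mechanism here is different.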

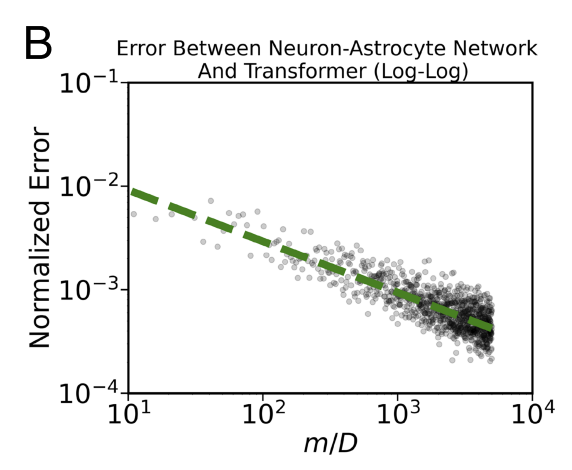

- Figure 2B is the key result: it shows the error between the outputs of AI Transformers and the counterpart neural circuit. It suggests that as neuron-astrocyte networks grow larger (as measured by the number of hidden units m), the match with Transformers improves. What I would really love to see is the counterpart error plot for a real astrocyte network vs. Transformers (or, equivalently, validation of the constructed astrocyte-like circuit against real biological astrocyte data).

- Figure 3 is also a nice snapshot of the results: it shows line plots of the astrocyte-style circuit's outputs superposed on the Transformer results. It is evident here that large astrocyte-style circuits really do appear to agree well with Transformers on language and vision problems (whereas small ones do not). However, one has to wonder whether something like over-fitting is going on, since the authors specifically constructed the astrocyte network to resemble Transformers and, moreover, gave it a large number of tunable weights (the hidden units m) to produce the agreement.

References:

AI transformers shed light on the brain’s astrocytes | IBM Research Blog

Building transformers from neurons and astrocytes | PNAS

The Large Associative Memory Problem in Neurobiology and Machine Learning | arXiv:2008.06996
