Building Towards Computer Use with Anthropic

In my last blog post, I wrote about how AI agents function. Essentially, an agent system is a group of LLMs whose outputs become one another's inputs, so that together they can solve a task; a single LLM can also act as an agent on its own by using tools (such as computer applications or functions in code) to complete tasks. For example, AI agents can perform computer tasks that we would normally associate with developers or other human workers, such as writing code or operating software applications. DeepLearning.AI's recent short course, Building Towards Computer Use with Anthropic, offers a window into those possibilities; I am using this blog post to take notes on it.
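The loop described above, where a model's output becomes the next input and a tool call is executed on the model's behalf, can be sketched in a few lines of Python. This is a minimal illustration, not Anthropic's implementation: `fake_llm` is a hypothetical stand-in for a real model call, and the `add` tool and the message dictionaries are assumptions made up for the example.

```python
from typing import Callable


# Hypothetical tool: a plain Python function the agent is allowed to call.
def add(a: float, b: float) -> float:
    return a + b


TOOLS: dict[str, Callable] = {"add": add}


def fake_llm(history: list[dict]) -> dict:
    """Hypothetical stand-in for a real LLM call. It requests one tool
    call, then returns a final answer once it sees the tool's result."""
    last = history[-1]
    if last["role"] == "user":
        return {"type": "tool_call", "name": "add", "args": {"a": 2, "b": 3}}
    return {"type": "final", "text": f"The answer is {last['content']}."}


def run_agent(task: str, llm=fake_llm, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = llm(history)
        if reply["type"] == "final":
            return reply["text"]
        # Execute the requested tool and feed its result back as the next input.
        result = TOOLS[reply["name"]](**reply["args"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is 2 + 3?"))
```

Swapping `fake_llm` for a real model call and `TOOLS` for real functions (or computer actions) keeps the same shape: call the model, run whatever it asks for, append the result, repeat.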

Introduction:

In Anthropic's approach to computer use, an LLM decides which mouse clicks and keystrokes to perform, and those actions are carried out on the computer as steps in an agentic workflow. Anthropic provides an API for building such agents. Anthropic's models accept up to 200,000 input tokens (roughly 500 pages of text), which enables long-form prompts and prompt caching. Loosely, one might compare this to giving a human worker 500 pages of notes to consult while solving a problem, in tandem with the knowledge already stored in their brain; pushing the analogy further, in an agentic workflow those notes would also be used to track and coordinate the group's work.
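To make the prompt-caching idea concrete, here is a sketch of a Messages API request body in which a long, reused block of notes is marked as cacheable with a `cache_control` entry, so repeated requests can reuse that prefix instead of reprocessing it. It only assembles the JSON payload and sends nothing. The model name, `max_tokens` value, and the `build_cached_request` helper are assumptions for illustration; the exact model names and any required headers should be checked against Anthropic's current documentation.

```python
import json


def build_cached_request(reference_notes: str, question: str) -> dict:
    """Hypothetical helper: build a Messages API request body that marks a
    long, reused system prefix as cacheable. Nothing is sent over the network."""
    return {
        "model": "claude-3-5-sonnet-20241022",  # assumed model name
        "max_tokens": 1024,  # assumed output limit for the example
        "system": [
            {"type": "text", "text": "You are a helpful assistant."},
            {
                "type": "text",
                "text": reference_notes,
                # The prompt prefix up to and including this block is cached.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only this part changes from request to request.
        "messages": [{"role": "user", "content": question}],
    }


notes = "Meeting notes for the project. " * 1000  # stand-in for a long document
body = build_cached_request(notes, "Summarize the action items.")
print(json.dumps(body)[:80])
```

The design choice is to put the large, stable material first and the changing question last, since caching applies to a shared prefix.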

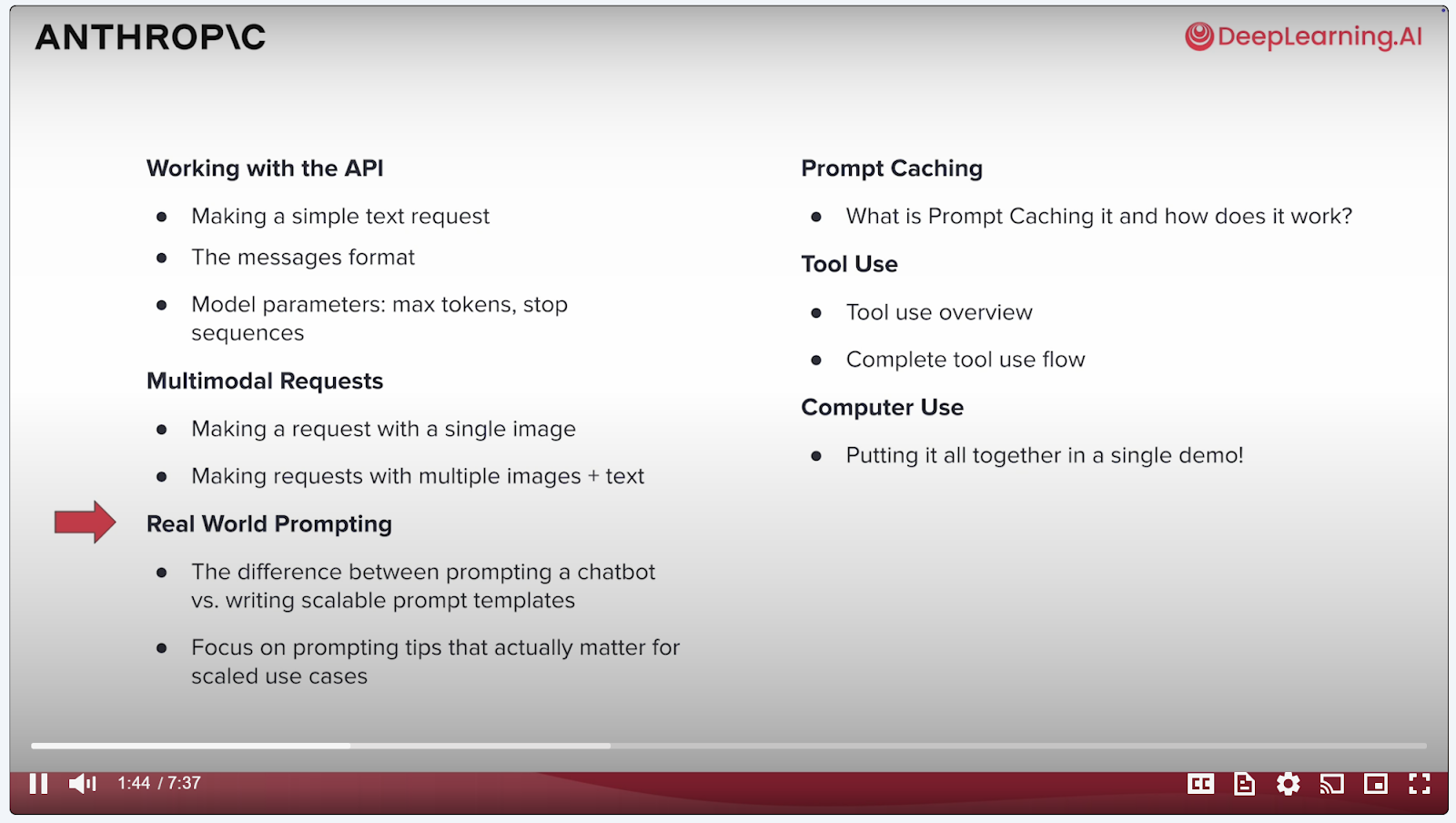

Overview:

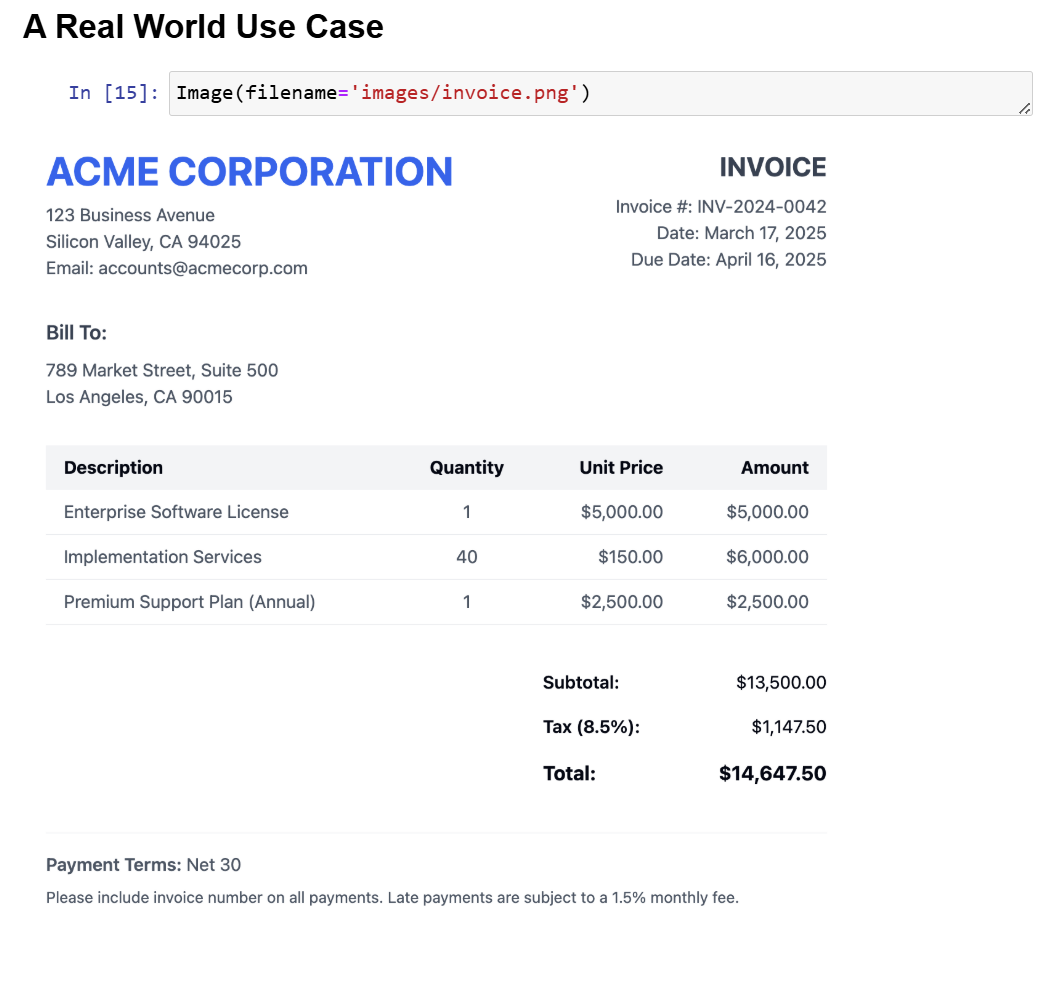

Claude is multimodal, so users can prompt it with image data as well as text.
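As a sketch of what a multimodal prompt looks like, the function below builds a single user message that pairs a base64-encoded image with a text instruction, using the image content-block format of Anthropic's Messages API. The helper name `image_message` and the placeholder bytes are assumptions for the example, and the message is only constructed, not sent.

```python
import base64


def image_message(image_bytes: bytes, media_type: str, prompt: str) -> dict:
    """Hypothetical helper: build one user message pairing an image with text."""
    return {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {
                    "type": "base64",
                    "media_type": media_type,
                    # The API expects the raw image bytes as a base64 string.
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                },
            },
            {"type": "text", "text": prompt},
        ],
    }


fake_png = b"\x89PNG\r\n\x1a\n"  # placeholder bytes (PNG signature only), not a real image
msg = image_message(fake_png, "image/png", "Describe this chart.")
```

Placing the image before the text instruction within the same message is a common pattern; the list could also hold several images followed by one question.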

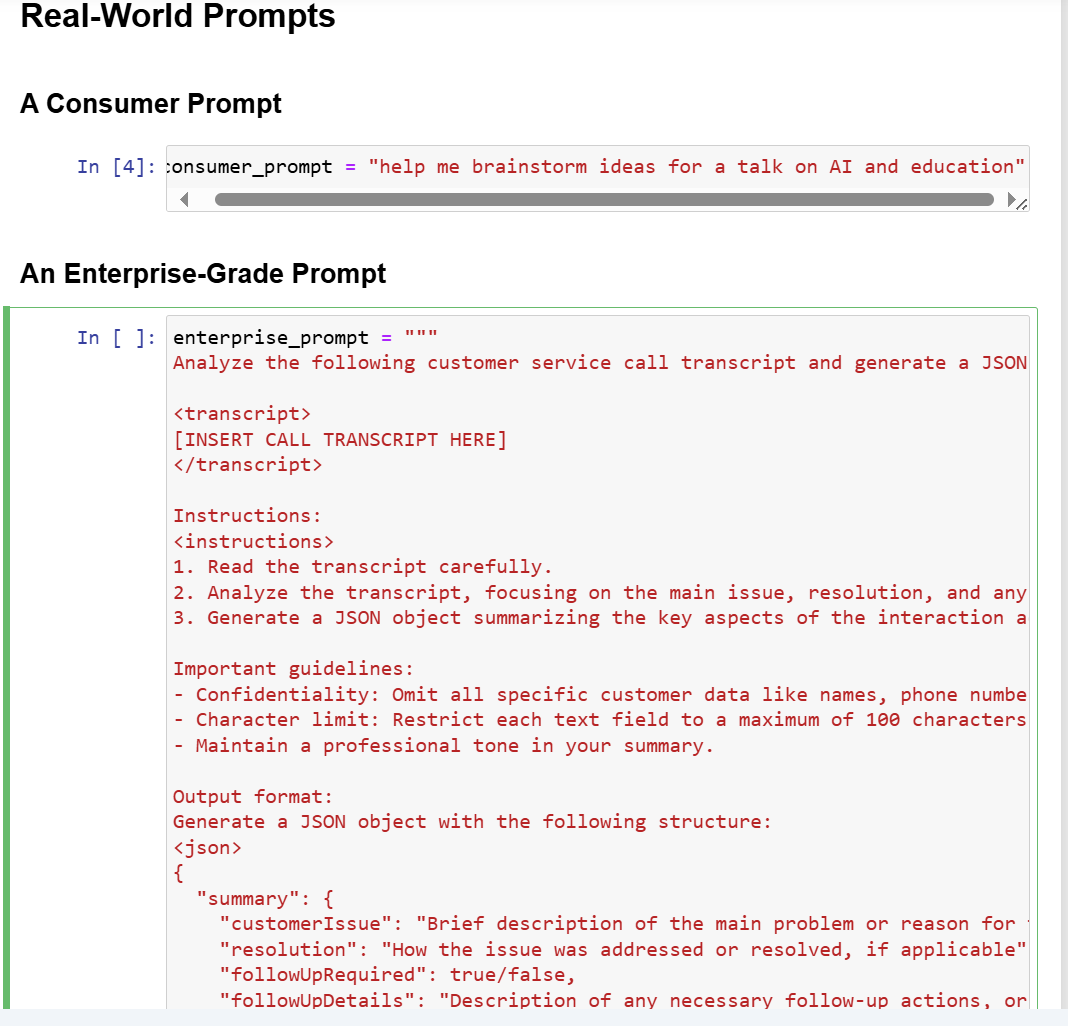

Real World Prompting:

Everyday consumer use of LLMs like ChatGPT in the browser suggests that LLMs can be prompted in a casual, loose manner. That works for one-off consumer use cases, but there is also a place for more systematic, scalable, enterprise-level prompting, which involves much longer and more structured prompts: for example, prompting an LLM to write meeting or call notes, or to summarize the sentiment of product reviews.
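A structured, reusable prompt for the product-review example might look like the sketch below: fixed instructions, with the variable data wrapped in XML-style tags so the model can tell instructions apart from input, a style Anthropic's prompting guidance recommends. The template wording, tag names, and `build_review_prompt` helper are assumptions for illustration, not the course's actual prompt.

```python
from string import Template

# Hypothetical template: fixed instructions plus XML-tagged slots for the data.
REVIEW_PROMPT = Template(
    "You are analyzing customer reviews for $product.\n"
    "Classify the overall sentiment of each review as positive, negative, or mixed, "
    "then summarize the most common complaints.\n\n"
    "<reviews>\n$reviews\n</reviews>\n\n"
    "Put your answer inside <summary></summary> tags."
)


def build_review_prompt(product: str, reviews: list[str]) -> str:
    # Number each review so the model (and a human reader) can refer back to it.
    tagged = "\n".join(
        f'<review id="{i}">{text}</review>' for i, text in enumerate(reviews, start=1)
    )
    return REVIEW_PROMPT.substitute(product=product, reviews=tagged)


prompt = build_review_prompt(
    "a wireless mouse", ["Great battery life.", "Scroll wheel broke."]
)
print(prompt)
```

Because the template is fixed and only the tagged data changes, the same prompt can be run over thousands of reviews, which is what makes it scalable compared to ad hoc chat prompts.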

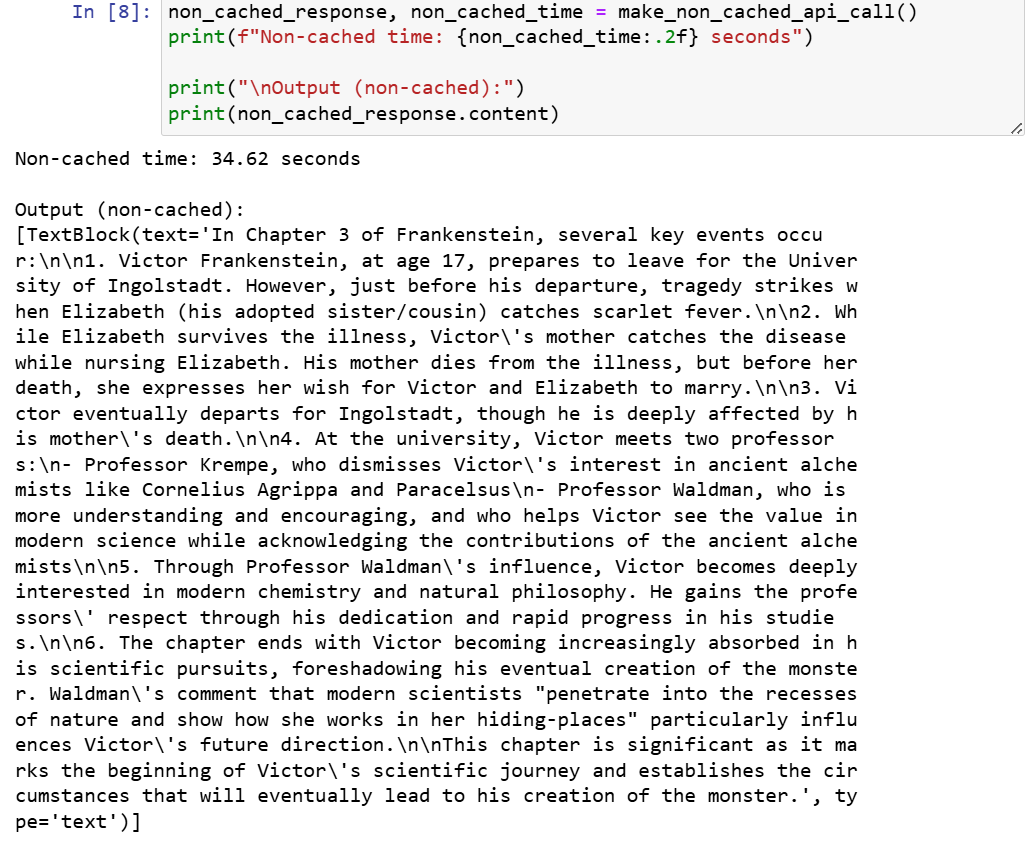

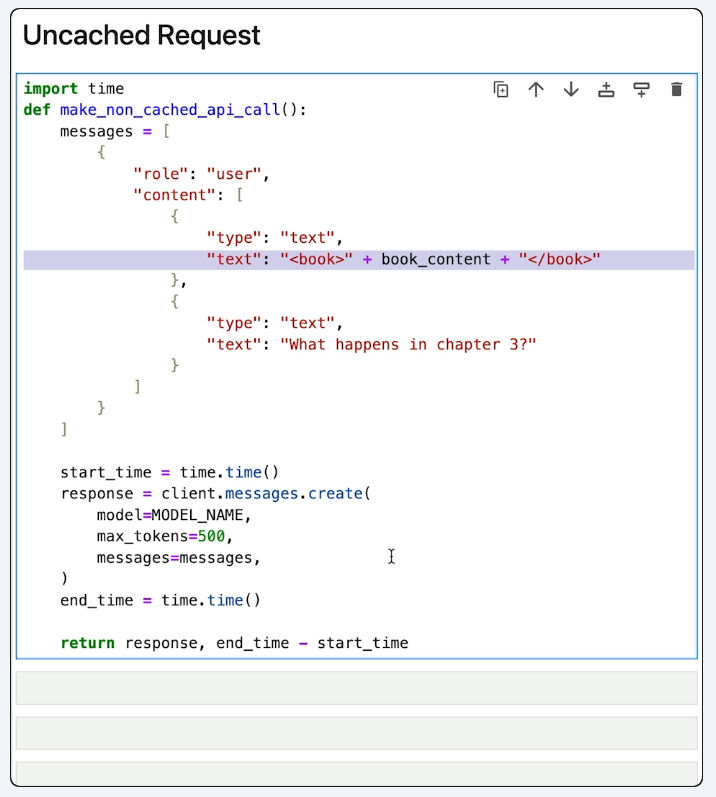

Prompt Caching:

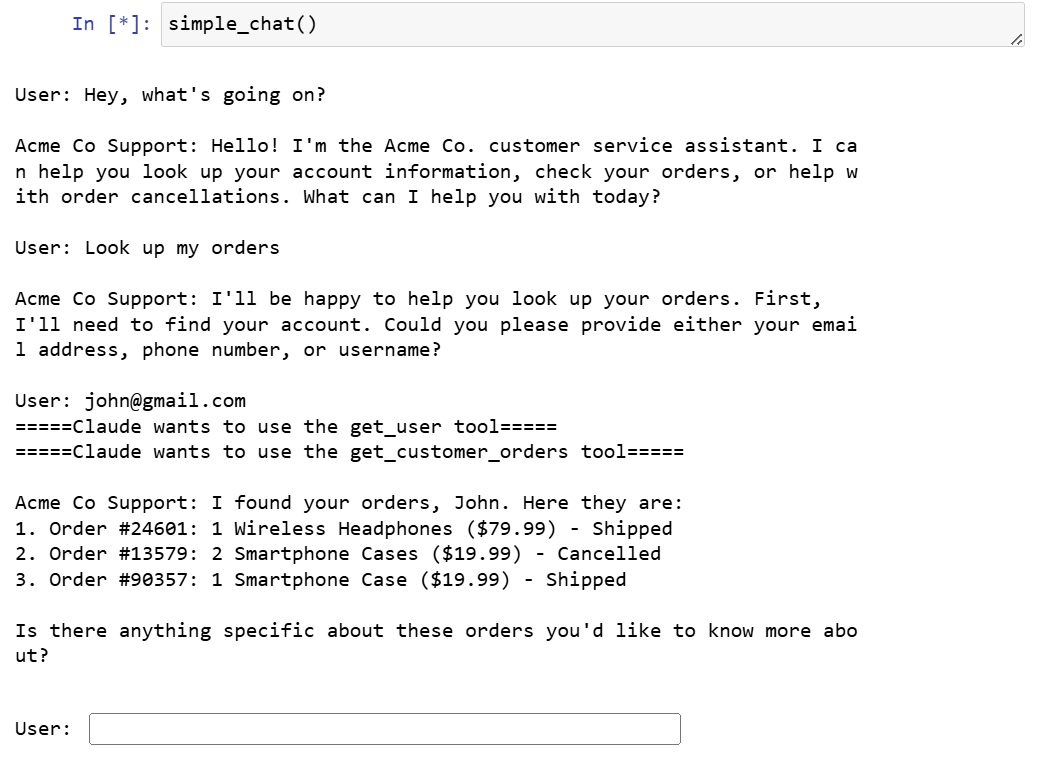

Tool Use (Function Calling):

Computer Use:
